Someone set up a bogus Facebook account and posted, without consent, images and video of Plaintiff engaged in a lewd act. Facebook finally deleted the account, but not until two days had passed and Plaintiff had threatened legal action.

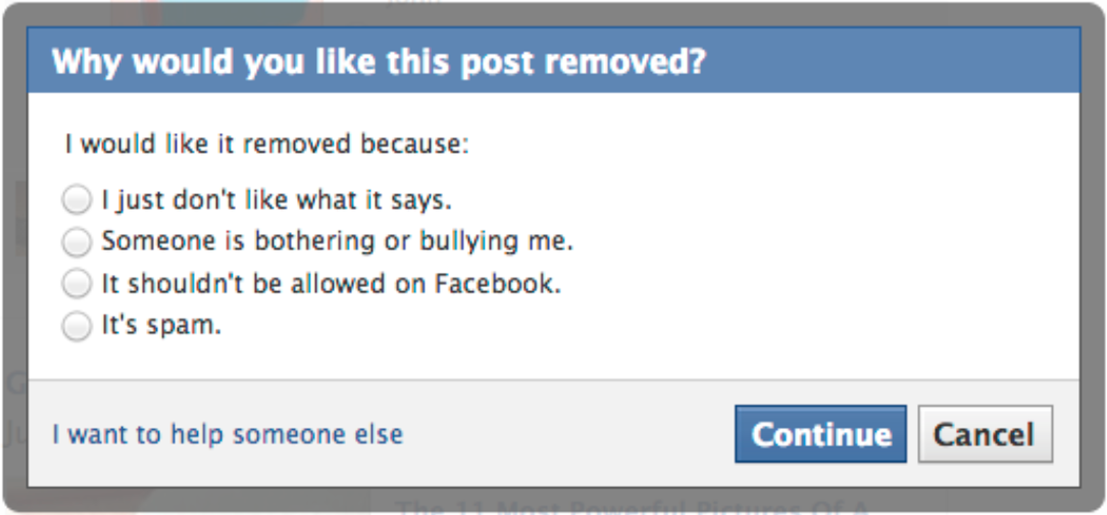

Plaintiff sued anyway, alleging, among other things, intrusion upon seclusion, public disclosure of private facts, and infliction of emotional distress. In his complaint, Plaintiff emphasized language from Facebook’s Terms of Service that prohibited users from posting content or taking any action that “infringes or violates someone else’s rights or otherwise would violate the law.”

Facebook moved to dismiss the claims on two grounds: (1) that the claims contradicted Facebook's Terms of Service, and (2) that the claims were barred by the Communications Decency Act, 47 U.S.C. § 230. The court granted the motion to dismiss.

The court looked to the following provision from Facebook's Terms of Service:

> Although we provide rules for user conduct, we do not control or direct users' actions on Facebook and are not responsible for the content or information users transmit or share on Facebook. We are not responsible for any offensive, inappropriate, obscene, unlawful or otherwise objectionable content or information you may encounter on Facebook. We are not responsible for the conduct, whether online or offline, of any user of Facebook.

The court also examined the following language from the Terms of Service:

> We try to keep Facebook up, bug-free, and safe, but you use it at your own risk. We are providing Facebook as is without any express or implied warranties including, but not limited to, implied warranties of merchantability, fitness for a particular purpose, and non-infringement. We do not guarantee that Facebook will always be safe, secure or error-free or that Facebook will always function without disruptions, delays or imperfections. Facebook is not responsible for the actions, content, information, or data of third parties, and you release us, our directors, officers, employees, and agents from any claims and damages, known and unknown, arising out of or in any way connected with any claims you have against any such third parties.

The court found that, having relied on the Terms of Service to support his claims against Facebook, Plaintiff could not disavow the portions of the Terms of Service that did not support his case. Because the Terms of Service said, among other things, that Facebook was not responsible for the content its users post and that a user uses the service at his or her own risk, the court could not place responsibility for the offensive content on Facebook.

Moreover, the court held that the Communications Decency Act shielded Facebook from liability. The CDA immunizes providers of interactive computer services against liability arising from content created by third parties. The court found that all three elements of that immunity were met: (1) Facebook was an interactive computer service as contemplated under the CDA, (2) the information for which Plaintiff sought to hold Facebook liable was provided by another information content provider, and (3) the complaint sought to treat Facebook as the publisher or speaker of that information.

Caraccioli v. Facebook, 2016 WL 859863 (N.D. Cal., March 7, 2016)

About the Author: Evan Brown is a Chicago attorney advising enterprises on important aspects of technology law, including software development, technology and content licensing, and general privacy issues.